MONTHLY BLOG 52, FACTS AND FACTOIDS IN HISTORY

If citing, please kindly acknowledge copyright © Penelope J. Corfield (2015)

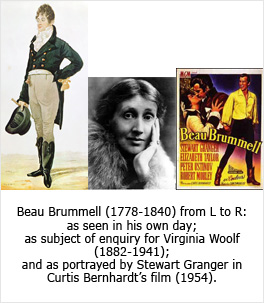

Is it a fact or a factoid? There are lots of those impostors around. Historical films perpetrate new examples daily and the web circulates them with impartial zeal. Items of information that can be verified and cross-checked with reference to other sources count as facts. But even apparently well-established truths can turn out to be no more than factoids. That useful noun was coined in 1973 by Norman Mailer when writing about Marilyn Monroe, about whom myths and legends still gather.1

| Norman Mailer (1923-2007) – maverick American author who experimented with creative literature, confessional writing, journalism, biography and non-fiction. |

A factoid is an item of information that has gained a fact-like status through frequent repetition, even though it is actually erroneous. It may have been spawned by an outright invention or, more subtly, grown by an accretion of myth and repetition. So factoids are like lies or untruths, but they are not necessarily circulated by people who know them to be false. Instead these purported facts tend to be recycled again and again as uncontroversial data that ‘everyone knows’. Thus factoids convey culturally embedded information which people would like to be true or feel ought to be true. For that reason, these phoney facts are hard to kill. And, even when slain, they may well rise and circulate again.

Eighteenth-century English history, like most periods, has generated some notable factoids of its own. One features the so-called Calendar Riots of September 1752. They have been frequently cited by historians; and one or two experts have even supplied details of their location (for example, in Bristol). I might have mentioned them in print myself, since I used to believe in their historical reality. But I didn’t commit myself publicly. That’s just as well, since there were no riots. It’s true that there was some popular grumbling and discontent in and after September 1752, when the old, lagging Julian calendar (until then standard throughout Britain) was officially jettisoned in favour of the astronomically more accurate Gregorian calendar (already in use across much of continental Europe). The gap was eleven days: Wednesday 2 September 1752 was followed directly by Thursday 14 September.

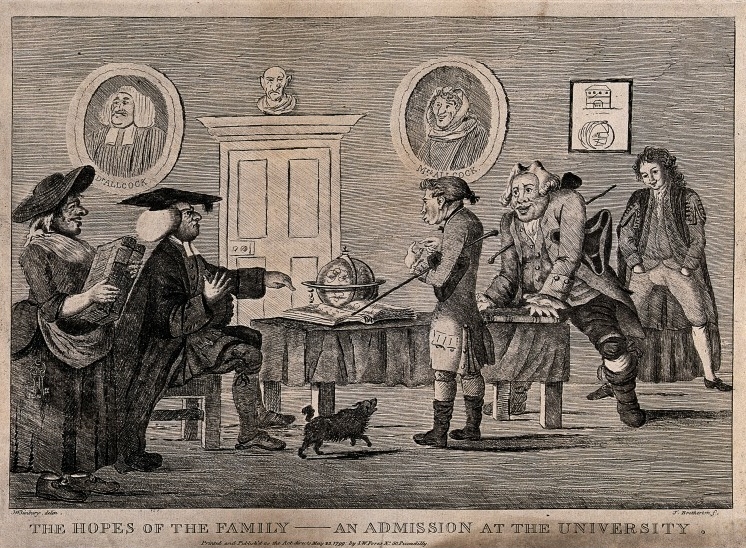

It was later myth which turned the grumbles of 1752 into riots. In one of his election satires, Hogarth included, casually amongst the chaos, an opposition poster demanding: ‘Give us our Eleven Days’. It was an irresistible formula: belligerent but anguished. Such an attitude matched what later generations rather snobbishly assumed would be the ‘natural’ response of the uneducated masses to such calendrical reforms. Expanded into ‘Give us Back our Eleven Days!’, the phrase still has resonance: we have been robbed of our time. Moreover, once the episode had been embroidered into a story of riots in the early nineteenth century, the tale gained weight and encrusted detail with each retelling.2

| William Hogarth’s satirical Election Entertainment (1755) shows a captured placard against calendar reform, casually discarded underfoot. |

Yet, in reality, the masses in England and Wales proved quite capable of adapting to the change, which had parliamentary authority, trading convenience, congruence with Scotland and with much of continental Europe, and scientific time-measurement on its side. As part of the reform process, 1 January was adopted as the start of the official year, instead of the old choice of 25 March (the quarterly Lady Day); Scotland had already made that switch in 1600. But, in a nod to continuity, the start of the tax year was kept in step with the old reckoning: it was moved on by eleven days, from 25 March to 5 April, and later, after a further one-day adjustment in 1800, to 6 April (as it still remains today). Thenceforth, England and Wales adhered without difficulty to the Gregorian calendar which, once synchronised across greater Europe, continued its long journey to becoming today’s global standard. The story is interesting enough without the addition of factoids. Instead, the significant fact is that the often riotous English population did not riot on this occasion.3

Another factoid features prominently in an oversimplified version of the history of English women before women’s liberation. It is fuelled by righteous indignation against men. Or rather, not against men individually, but against the traditional legal position of men vis-à-vis women. It takes the form of the bald assertion that, under common law, husbands ‘owned’ their wives. According to this factoid history, married women were legally on a par with domestic ‘chattels’ or household goods; they were thus the property of their husbands; in effect, legally slaves. But not so.

Certainly, the independence of a married woman was legally circumscribed. Hence the eighteenth-century joke that the only truly happy female state was to be a wealthy widow. As the cynical thief-taker Peachum explains to his daughter Polly in John Gay’s Beggar’s Opera (1728): ‘The comfortable Estate of Widow-hood, is the only Hope that keeps up a Wife’s Spirits’.4

All the same, married women were not legally defined as property, capable of being bought and sold. Instead, after marriage, the legal identity of a woman (with the exception of a Queen reigning in her own right) was merged with that of her husband. Under the common law of ‘couverture’, they were one person. This legal fiction meant that a husband could neither sue nor be sued by his wife (though the two were still required to behave lawfully towards one another). The law of ‘couverture’ also meant that they shared their assets and debts, unless they had made a separate pre-nuptial agreement (as a considerable number of women did). Both partners, in theory at least, gained a helpmeet and the social status that came with matrimony.

| Regency print of The Proposal. |

Needless to say, in practice there were plenty of provisos. Personalities always affected the de facto balance of power within a marriage. Friends, families and servants could keep an unofficial lookout to guard against unacceptable individual behaviour. Some women also had separate pre-nuptial financial arrangements, leaving them in charge of their own money.5 And a number of married businesswomen traded in their own right, if necessary turning to the Court of Chancery, a court of equity, to find a way round the rigidities of common law.6 The doctrine of matrimonial unity was potent but remained a legal fiction, not a universal fact.

| German print showing A Matrimonial Scene (1849) |

Publicly and legally, the cards always remained stacked in the husbands’ favour. To make the legal fiction work, entrenched custom dictated that it was the male who acted on behalf of the couple. Hence the tongue-in-cheek dictum attributed to many a proudly married man: ‘My wife and I are one – and I am he’.7

Given this inequity at the heart of marriage according to traditional common law, there was a very good case for the legal liberation of married women, which happened piecemeal in the course of the nineteenth century.8 But the case didn’t and doesn’t need the support of a clunking factoid. Married women were not disposable property. Their plight was compared with that of slaves by some feminist reformers. That’s more or less understandable as campaign rhetoric, even if it significantly underplays the sufferings of slaves. But the factoid should not be mistaken for fact.

Real reforms are made more difficult if the target is misrepresented. Let’s keep an eye out for pseudo-history and reject it whenever possible.9 We don’t want to fetishise ‘facts and facts alone’, since much knowledge depends upon evaluating ideas/theories/experience/analysis/assumptions/intuitions/propositions/opinions/debates/probabilities/possibilities and all the evidence which lies between certainty and uncertainty.10 Yet, given all those complexities, we don’t need factoids muddying the water as well.

1 N. Mailer, Marilyn: A Biography (New York, 1973). The term is sometimes also used, chiefly in the USA, to refer to a trivial fact or ‘factlet’: see en.wikipedia.org/wiki/Factoid.

2 Historian Robert Poole provides an admirable analysis in R. Poole, Time’s Alteration: Calendar Reform in Early Modern England (1998), esp. pp. 1-18, 159-78; and idem, ‘“Give Us our Eleven Days!” Calendar Reform in Eighteenth-Century England’, Past & Present, 149 (1995), pp. 95-139.

3 See variously E.P. Thompson, ‘The Moral Economy of the English Crowd in the Eighteenth Century’, in his Customs in Common (1991), pp. 185-258, and ‘The Moral Economy Reviewed’, in ibid., pp. 259-351; J. Stevenson, Popular Disturbances in England, 1700-1870 (1979); A. Randall and A. Charlesworth (eds), Markets, Market Culture and Popular Protest in Eighteenth-Century Britain and Ireland (Liverpool, 1996); R.B. Shoemaker, The London Mob: Violence and Disorder in Eighteenth-Century England (2004); and J. Bohstedt, The Politics of Provision: Food Riots, Moral Economy and Market Transition in England, c.1550-1850 (Aldershot, 2010).

4 J. Gay, The Beggar’s Opera (1728), Act 1, sc. 10.

5 A.L. Erickson, Women and Property in Early Modern England (1993).

6 N. Phillips, Women in Business, 1700-1850 (Woodbridge, 2006).

7 E.O. Hellerstein, L.P. Hume and K.M. Offen (eds), Victorian Women: A Documentary Account of Women’s Lives in Nineteenth-Century England, France, and the United States (Stanford, Calif., 1981), Part 2, section 33, pp. 161-6: ‘“My Wife and I are One, and I am He”: The Laws and Rituals of Marriage’.

8 M.L. Shanley, Feminism, Marriage, and Law in Victorian England, 1850-95 (Princeton, 1989); A. Chernock, Men and the Making of Modern British Feminism (Stanford, Calif., 2010).

9 That’s why it’s good that these days freelance websites regularly highlight inaccuracies, omissions and inventions in historical films, before new factoids gain currency.

10 For opposition to the tyranny of facts, see Dickens’s critique of Mr Gradgrind in Hard Times (1854); L. Hudson, The Cult of the Fact (1972). With thanks to Tom Barney for a good conversation on this theme at the recent West London Local History Conference.

One reason for the continuing trepidation was that the art of public speaking does not depend solely on the nerve of the speaker. Successful oratory depends upon an unstated but very real reciprocity: the audience has to be prepared to listen and to respond. If those present are unwilling, the result can be anything from hostile shouting, jeers, catcalls and obscenities to the throwing of missiles, or simply turning away. Social conventions, in other words, are policed not so much by law (though it may contribute) as by widely shared beliefs.
