MONTHLY BLOG 103, WHO KNOWS THESE HISTORY GRADUATES BEFORE THE CAMERAS AND MIKES IN TODAY’S MASS MEDIA?

If citing, please kindly acknowledge copyright © Penelope J. Corfield (2019)

Image © Shutterstock 178056255

Responding to the often-asked question, ‘What do History graduates do?’, I usually reply, truthfully, that they gain employment in an immense range of occupations. But this time I’ve decided to name a popular field and to cite some high-profile cases, to give specificity to my answer. The context is the labour-intensive world of the mass media. It is no surprise to find that numerous History graduates find jobs in TV and radio. They are familiar with a big subject of universal interest – the human past – which contains something for all audiences. They are simultaneously trained to digest large amounts of disparate information and ideas, before welding them into a show of coherence. And they have specialist expertise in ‘thinking long’. That hallmark perspective buffers them against undue deference to the latest fads or fashions – and indeed buffers them against the slings and arrows of both fame and adversity.

In practice, most History graduates in the mass media start and remain behind-the-scenes. They flourish as managers, programme commissioners, and producers, generally far from the fickle bright lights of public fame. Collectively, they help to steer the evolution of a fast-changing industry, which wields great cultural clout.1

There’s no one single route into such careers, just as there’s no one ‘standard’ career pattern once there. It’s a highly competitive world. And often, in terms of personpower, a rather traditionalist one. Hence there are current efforts by UK regulators to encourage a wider diversity in terms of ethnic and gender recruiting.2 Much depends upon personal initiative, perseverance, and a willingness to start at comparatively lowly levels, generally behind the scenes. It often helps as well to have some hands-on experience – whether in student or community journalism; in film or video; or in creative applications of new social media. But already-know-it-all recruits are not as welcome as those ready and willing to learn on the job.

Generally, there’s a huge surplus of would-be recruits over the number of jobs available. It’s not uncommon for History students (and no doubt many others) to dream, rather hazily, of doing something visibly ‘big’ on TV or radio. However, front-line media jobs in the public eye are much more difficult than they might seem. They require a temperament that is at once super-alert, good-humoured, sensitive to others, and quick to respond to immediate issues – and yet is simultaneously cool under fire, not easily sidetracked, not easily hoodwinked, and implacably immune from displays of personal pique and ego-grandstanding. Not an everyday combination.

It’s also essential for media stars to have a thick skin to cope with criticism. The immediacy of TV and radio creates the illusion that individual broadcasters are personally ‘known’ to the public, who therefore feel free to commend/challenge/complain with unbuttoned intensity.

Those impressive History graduates who appear regularly before the cameras and mikes are therefore a distinctly rare breed.3 (The discussion here refers to media presenters in regular employment, not to the small number of academic stars who script and present programmes while retaining full-time academic jobs – who constitute a different sort of rare breed).

Celebrated exemplars among History graduates include the TV news journalists and media personalities Kirsty Wark (b.1955) and Laura Kuenssberg (b.1976), who are both graduates of Edinburgh University. Both have had public accolades – Wark was elected as Fellow of the Royal Society of Edinburgh in 2017 – and both face much criticism. Kuenssberg in particular, as the BBC’s first woman political editor, is walking her way warily but effectively through the Gothic-melodrama-cum-Greek-tragedy-cum-high-farce known as Brexit.

In a different sector of the media world, the polymathic TV and radio presenter, actor, film critic and chat-show host Jonathan Ross (b.1960) is another History graduate. He began his media career young, as a child in a TV advertisement for a breakfast cereal. (His mother, an actor, put him forward for the role). Then, having studied Modern European History at London University’s School of Slavonic & Eastern European Studies, Ross worked as a TV programme researcher behind the scenes, before eventually fronting the shows. Among his varied output, he’s written a book entitled Why Do I Say These Things? (2008). This title for his stream of reminiscences highlights the tensions involved in being a ‘media personality’. On the one hand, there’s the need to keep stoking the fires of fame; but, on the other, there’s an ever-present risk of going too far and alienating public opinion.

Similar tensions accompany the careers of two further History graduates, who are famed as sports journalists. The strain of never making a public slip must be enormous. John Inverdale (b.1957), a Southampton History graduate, and Nicky Campbell (b.1961), ditto from Aberdeen, have to cope not only with the immediacy of the sporting moment but also with the passion of the fans. Over the years, Inverdale racked up a number of gaffes. Some were unfortunate. None fatal. Nonetheless, readers of the Daily Telegraph in August 2016 were asked rhetorically, and obviously inaccurately: ‘Why Does Everyone Hate John Inverdale?’4 That sort of over-the-top response indicates the pressures of life in the public eye.

Alongside his career in media, meanwhile, Nicky Campbell used his research skills to study the story of his own adoption. His book Blue-Eyed Son (2011)5 sensitively traced his extended family roots among both Protestant and Catholic communities in Ireland. His current role as a patron of the British Association for Adoption and Fostering welds this personal experience into a public role.

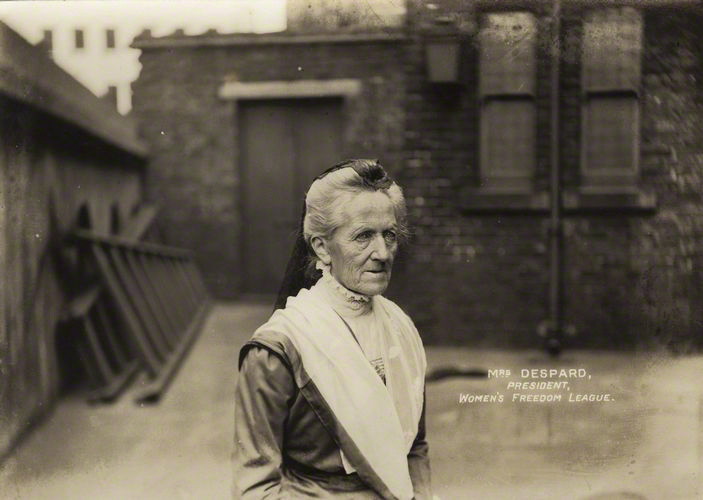

The final exemplar cited here is one of the most notable pioneers among women TV broadcasters. Baroness Joan Bakewell (b.1933) has had what she describes as a ‘rackety’ career. She studied first Economics and then History at Cambridge. After that, she experienced periods of considerable TV fame followed by the complete reverse, in her ‘wilderness years’.6 Yet her media skills, her stubborn persistence, and her resistance to being publicly patronised for her good looks in the 1960s, have given Bakewell media longevity. She is not afraid of voicing her views, for example in 2008 criticising the absence of older women on British TV. In her own maturity, she can now enjoy media profiles such as that in 2019 which explains: ‘Why We Love Joan Bakewell’.7 No doubt, she takes the commendations with the same pinch of salt as she took being written off in her ‘wilderness years’.

Bakewell is also known as an author; and for her commitment to civic engagement. In 2011 she was elevated to the House of Lords as a Labour peer. And in 2014 she became President of Birkbeck College, London. In that capacity, she stresses the value – indeed the necessity – of studying History. Her public lecture on the importance of this subject urged, in timely fashion, that: ‘The spirit of enquiring, of evidence-based analysis, is demanding to be heard.’8

What do these History graduates in front of the cameras and mikes have in common? Their multifarious roles as journalists, presenters and cultural lodestars indicate that there’s no straightforward pathway to media success. These multi-skilled individuals work hard for their fame and fortunes, concealing the slog behind an outer show of relaxed affability. They’ve also learned to live with the relentless public eagerness to enquire into every aspect of their lives, from health to salaries, and then to criticise the same. Yet it may be speculated that their early immersion in the study of History has stood them in good stead. As already noted, they are trained in ‘thinking long’. And they are using that great art to ‘play things long’ in career terms as well. History graduates, to repeat, work in a remarkable variety of fields. And, among them, some striking stars appear regularly in every household across the country, courtesy of today’s mass media.

ENDNOTES:

1 O. Bennett, A History of the Mass Media (1987); P.J. Fourie (ed.), Media Studies, Vol. 1: Media History, Media and Society (2nd edn., Cape Town, 2007); G. Rodman, Mass Media in a Changing World: History, Industry, Controversy (New York, 2008).

2 See Ofcom Report on Diversity and Equal Opportunities in Television (2018): https://www.ofcom.org.uk/__data/assets/pdf_file/0021/121683/diversity-in-TV-2018-report.PDF

3 Information from diverse sources, including esp. the invaluable survey by D. Nicholls, The Employment of History Graduates: A Report for the Higher Education Authority … (2005): https://www.heacademy.ac.uk/system/files/resources/employment_of_history_students_0.pdf; and short summary by D. Nicholls, ‘Famous History Graduates’, History Today, 52/8 (2002), pp. 49-51.

4 See https://www.telegraph.co.uk/olympics/2016/08/15/why-does-everyone-hate-john-inverdale?

5 N. Campbell, Blue-Eyed Son: The Story of an Adoption (2011).

6 J. Bakewell, interviewed by S. Moss, in The Guardian, 4 April 2010: https://www.theguardian.com/lifeandstyle/2010/apr/04/joan-bakewell-harold-pinter-crumpet

7 https://www.bbc.co.uk/programmes/articles/1xZlS9nh3fxNMPm5h3DZjhs/why-we-love-joan-bakewell.

8 J. Bakewell, ‘Why History Matters: The Eric Hobsbawm Lecture’ (2014): http://joanbakewell.com/history.html.


Historians, who study the past, don’t undertake this exercise from some vantage point outside Time. They, like everyone else, live within an unfolding temporality. That’s very fundamental. Thus it’s axiomatic that historians, like their subjects of study, are all equally Time-bound.1
